Log4Net supports writing to multiple destinations through the use of “Appenders”. In this post, we will take a look at the AdoNetAppender, which allows us to easily write logs to a database table. To begin, let's create a new MVC project.

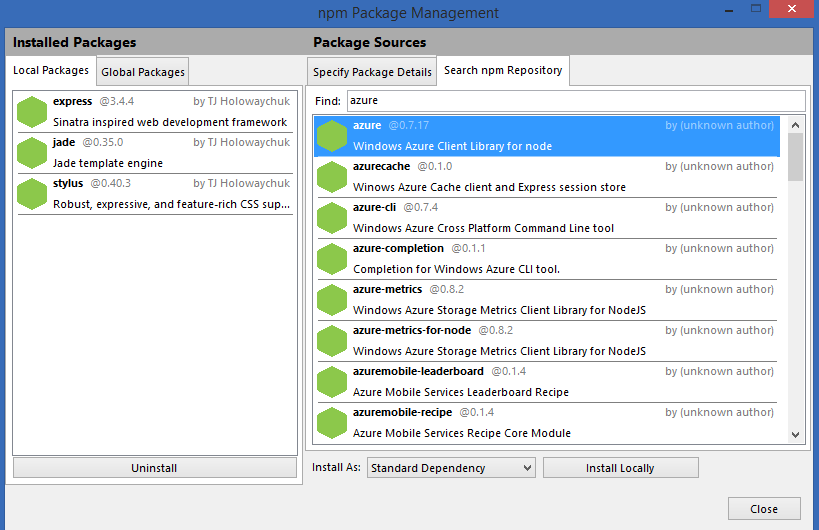

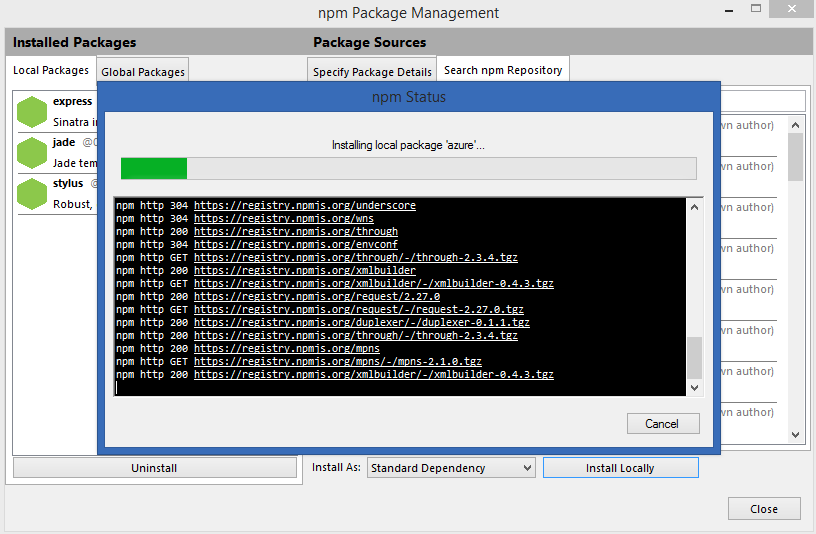

Next, we add a reference to Log4Net using the NuGet package manager.

To continue, add a new XML file to your solution, name it Log4Net.config, and then copy the configuration at http://pastie.org/6118426 into the new file.

The AdoNetAppender can get tricky because it has a few options that usually go undiscussed. The first one to note is the “bufferSize” setting; a value of 0 disables buffering. Once we complete the Log4Net configuration, we will see that logging data to the table is very easy, and depending on how much work a page does, it is not unusual to log more than 10 messages per page request. To reduce the performance cost of that extra database traffic, the AdoNetAppender can batch these inserts into the log table. However, I recommend leaving this setting at 0 until you notice a performance impact. I consider this the first hiccup when installing Log4Net, because some examples I have seen set this value to 10 and then never discuss it. That usually makes logging look broken when we run a simple test with a single message, because the buffer is only flushed to the database once it has filled up with 10 messages.

Another core feature of Log4Net is the ability to configure your log level. Log4Net captures information in prioritized categories, listed here from most to least severe:

- Fatal

- Error

- Warn

- Info

- Debug
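To make the “bufferSize” and log level settings concrete, here is a minimal sketch that builds a comparable AdoNetAppender in code with log4net's own types instead of Log4Net.config. It is not the configuration from the pastie link: the column names, parameter names, and sizes are assumptions that must line up with whatever log table you create, and the SqlConnection type string assumes a .NET 4 project. The root level is set to INFO, so DEBUG messages are dropped while INFO, WARN, ERROR, and FATAL pass through.

```csharp
using System.Data;
using log4net;
using log4net.Appender;
using log4net.Core;
using log4net.Layout;
using log4net.Repository.Hierarchy;

// Hypothetical sketch: AdoNetAppender settings applied in code rather than XML.
// Column names and sizes below are assumptions, not the original Log4Net.config.
public static class AdoNetLoggingSketch
{
    public static ILog Configure(string connectionString)
    {
        var appender = new AdoNetAppender
        {
            Name = "AdoNetAppender",
            // 0 (or 1) disables buffering: each event is written as soon as it is logged.
            BufferSize = 0,
            // Assumes System.Data 4.0 (.NET 4) and SQL Server.
            ConnectionType = "System.Data.SqlClient.SqlConnection, System.Data, Version=4.0.0.0, Culture=neutral, PublicKeyToken=b77a5c561934e089",
            // Supplied by the caller, e.g. resolved from the entity connection string.
            ConnectionString = connectionString,
            // Must match the columns of the Log4Net table exactly.
            CommandText = "INSERT INTO Log4Net ([Date],[Level],[Logger],[Message],[Exception]) " +
                          "VALUES (@log_date, @log_level, @logger, @message, @exception)"
        };

        appender.AddParameter(new AdoNetAppenderParameter
        {
            ParameterName = "@log_date",
            DbType = DbType.DateTime,
            Layout = new RawTimeStampLayout()
        });
        appender.AddParameter(StringParameter("@log_level", 50, "%level"));
        appender.AddParameter(StringParameter("@logger", 255, "%logger"));
        appender.AddParameter(StringParameter("@message", 4000, "%message"));
        appender.AddParameter(new AdoNetAppenderParameter
        {
            ParameterName = "@exception",
            DbType = DbType.String,
            Size = 2000,
            Layout = new Layout2RawLayoutAdapter(new ExceptionLayout())
        });

        // Opens the database connection; failures are reported through log4net's
        // internal error handler rather than thrown.
        appender.ActivateOptions();

        // Equivalent of <root><level value="INFO" /></root> in the XML file.
        var hierarchy = (Hierarchy)LogManager.GetRepository();
        hierarchy.Root.RemoveAllAppenders();
        hierarchy.Root.AddAppender(appender);
        hierarchy.Root.Level = Level.Info;
        hierarchy.Configured = true;

        return LogManager.GetLogger(typeof(AdoNetLoggingSketch));
    }

    // Builds a string parameter whose value comes from a PatternLayout conversion pattern.
    private static AdoNetAppenderParameter StringParameter(string name, int size, string pattern)
    {
        return new AdoNetAppenderParameter
        {
            ParameterName = name,
            DbType = DbType.String,
            Size = size,
            Layout = new Layout2RawLayoutAdapter(new PatternLayout(pattern))
        };
    }
}
```

Because “BufferSize” is 0, nothing waits in memory for a batch; raising it trades immediacy for fewer round trips to the database.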

In our example, we have configured the Appender to capture information at the INFO level. Unless we change this setting, messages logged at the DEBUG level will not be saved to the database. However, we will still see entries from the more severe FATAL, ERROR, and WARN levels in addition to INFO. Because of this severity ordering, I find that most projects do not need the buffering option: they are deployed with a WARN or ERROR level, while the DEBUG and INFO levels are reserved for developer workstations and QA servers that are not being load tested.

Another hiccup I usually see is in the insert statement itself: make sure your insert command matches the log table you created. For our example we will use the following SQL to create a log table.

The last piece to discuss is the connection string, which I intentionally left as “{auto}” in our example. By default, the application does not load this configuration file, so our logging is not yet enabled. Also, in most scenarios we want to use the same database connection string throughout the application. To accomplish both goals I created the following helper class (http://pastie.org/6118219). After copying this class into your project, simply call its public method “InitializeLog4Net” from the “Application_Start” event in your Global.asax.cs class.

The Log4NetManager class loads our Log4Net.config file and the Appender configuration it contains. Next, it finds the AdoNetAppender settings we just loaded and, if the connection string is set to “{auto}”, overrides it with the connection string specified for our entity model, which keeps our two connection strings synchronized! At this point, Log4Net is configured and ready to run. All we have to do now is create some log entries.
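The helper itself lives on pastie, so the class below is only a hypothetical reconstruction of the behavior described above, not the original code. It assumes the entity model's connection string is named “NorthwindEntities” in Web.config and that the project uses Entity Framework 4 or 5 (EF6 moved EntityConnectionStringBuilder to the System.Data.Entity.Core.EntityClient namespace). The “{auto}” substitution is split into its own method so it can be checked without a web host.

```csharp
using System;
using System.Configuration;
using System.Data.EntityClient; // EF 4/5; in EF6 use System.Data.Entity.Core.EntityClient
using System.IO;
using System.Linq;
using log4net;
using log4net.Appender;
using log4net.Config;

// Hypothetical reconstruction of the Log4NetManager helper; not the original pastie code.
public static class Log4NetManager
{
    // Assumption: the entity model's connection string is named "NorthwindEntities" in Web.config.
    private const string EntityConnectionName = "NorthwindEntities";
    private const string AutoPlaceholder = "{auto}";

    public static void InitializeLog4Net()
    {
        // Log4Net.config is not loaded automatically, so load it from the site root.
        var configFile = new FileInfo(
            Path.Combine(AppDomain.CurrentDomain.BaseDirectory, "Log4Net.config"));
        XmlConfigurator.Configure(configFile);

        string entityConnection =
            ConfigurationManager.ConnectionStrings[EntityConnectionName].ConnectionString;

        // Find every AdoNetAppender that was just loaded and fill in "{auto}".
        foreach (var appender in LogManager.GetRepository().GetAppenders().OfType<AdoNetAppender>())
        {
            string resolved = ResolveConnectionString(appender.ConnectionString, entityConnection);
            if (resolved != appender.ConnectionString)
            {
                appender.ConnectionString = resolved;
                // Re-activate so the appender connects with the new connection string.
                appender.ActivateOptions();
            }
        }
    }

    // Returns the ADO.NET provider connection string embedded in the entity connection
    // string when the appender uses the "{auto}" placeholder; otherwise returns the
    // configured value unchanged.
    public static string ResolveConnectionString(string configured, string entityConnection)
    {
        if (configured != AutoPlaceholder)
        {
            return configured;
        }
        return new EntityConnectionStringBuilder(entityConnection).ProviderConnectionString;
    }
}
```

Calling ActivateOptions after changing ConnectionString matters: the AdoNetAppender sets up its database connection during activation, so the new connection string generally has no effect until activation runs again.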

To create our log entries we need an instance of a logger. The typical usage is to get a logger that represents the class you are working in, as we do here using the typeof operator. After that, we can create log messages by category. In this example, the call to “logger.Debug” will be ignored because we set the log level to INFO. Next, we insert our test message “HELLO WORLD”, and finally we catch an InvalidOperationException and report its message. Now, each time the Home/Index action runs, it will insert two new records into the Log4Net table in the Northwind database, as configured by our entity connection string.
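A minimal sketch of the HomeController.Index action described above might look like the following. It follows the description (a per-class logger obtained with typeof, a DEBUG call filtered out by the INFO level, the “HELLO WORLD” message, and a caught InvalidOperationException logged as an error); the message strings other than “HELLO WORLD” are hypothetical placeholders.

```csharp
using System;
using System.Web.Mvc;
using log4net;

public class HomeController : Controller
{
    // One logger per class, named after the type; created once rather than per request.
    private static readonly ILog logger = LogManager.GetLogger(typeof(HomeController));

    public ActionResult Index()
    {
        // Filtered out: DEBUG is below the INFO level configured for the appender.
        logger.Debug("Entering Home/Index");

        // Logged at INFO level.
        logger.Info("HELLO WORLD");

        try
        {
            // Placeholder failure to demonstrate error logging.
            throw new InvalidOperationException("Example failure");
        }
        catch (InvalidOperationException ex)
        {
            // Logged at ERROR level together with the exception.
            logger.Error(ex.Message, ex);
        }

        return View();
    }
}
```

With the INFO level in place, each request produces two rows: the INFO entry and the ERROR entry.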

via: http://www.oakwoodinsights.com/adding-log4net-mvc-site/